Xen virtualization can be a very effective way to deploy software agents at large scale in a virtualized network environment, for example to test an application’s scalability.

If you wanted to generate Xen domUs en masse, the obvious first step would be to create a master virtual disk image and Xen config file. A script that clones this disk and configuration could then easily be written along these lines:

– Copy configuration file and disk image to a specific directory

– Edit configuration in order to adapt it to the new machines

– Launch the newly created domU
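The three steps above could be sketched roughly as the shell function below. This is only an illustration under stated assumptions: the MASTER_IMG and MASTER_CFG variables, the clone_domu name, and the idea that the master config contains a `name =` line and references the master image path are all hypothetical. The XM variable exists only so that the launch command can be swapped out for a dry run.

```shell
#!/bin/sh
# Naive per-domU clone: copy, adapt, launch.
# MASTER_IMG and MASTER_CFG are hypothetical variables that must point
# to the master disk image and the master Xen config file.

# clone_domu NAME DESTDIR
clone_domu() {
    name=$1
    dest=$2

    # 1. copy the disk image to a specific directory
    mkdir -p "$dest" || return 1
    cp "$MASTER_IMG" "$dest/$name.img" || return 1

    # 2. copy the config, adapting the domU name and the disk path
    sed -e "s|^name *=.*|name = '$name'|" \
        -e "s|$MASTER_IMG|$dest/$name.img|" \
        "$MASTER_CFG" > "$dest/$name.cfg" || return 1

    # 3. launch the new domU (set XM=echo for a dry run)
    ${XM:-xm create} "$dest/$name.cfg"
}
```

Calling `clone_domu agent01 /xen/domains/agent01` (hypothetical names) would leave a private image and config in that directory and then start the guest.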

However, this process is suboptimal in several ways. First, each virtual machine uses its own copy of the master disk image, so any change to the system (e.g. a software or distribution upgrade) has to be performed on each domU individually. Second, the disk space requirements of such a setup quickly become high, since each domU needs a full copy of the master disk image (a typical Ubuntu debootstrap is around 700 MB).

One solution would be to use the same image file for all of the domU disks. However, a system needs a disk it can write to when it boots. This is where a ramdisk or a second (smaller) virtual disk comes in handy. But how do you tell the system to write to this ramdisk or second disk instead of the shared disk image? This is where union filesystems such as unionfs or aufs come in: they let you make two different filesystems appear as a single one to the system.
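To get a feel for the disk space at stake, here is a small back-of-the-envelope calculation in shell. The 700 MB image size is the figure quoted above; the number of domUs and the size of the per-domU writable disk are hypothetical inputs, as are the function names.

```shell
#!/bin/sh
# Rough disk usage in MB for N domUs (integer arithmetic only).

# full_copies_mb N IMAGE_MB: every domU owns a full copy of the image
full_copies_mb() {
    echo $(( $1 * $2 ))
}

# shared_image_mb N IMAGE_MB RW_MB: one shared image plus a small
# writable disk per domU
shared_image_mb() {
    echo $(( $2 + $1 * $3 ))
}
```

For 50 domUs, `full_copies_mb 50 700` gives 35000, whereas `shared_image_mb 50 700 100` gives 5700.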

For example, a setup like the following can be achieved:

/dev/sda1 is 3 GB

/dev/sda2 is 300 MB

You can make / the union of both filesystems. For example, if either /dev/sda1 or /dev/sda2 contains the file /etc/fstab, the resulting aufs filesystem will contain /etc/fstab. Furthermore, you can make /dev/sda1 read-only and /dev/sda2 read-write. The aufs branch hierarchy then ensures that when a file from /dev/sda1 is modified, the change is written to /dev/sda2, and that a file present on /dev/sda2 takes priority over the same file on /dev/sda1.
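The priority rule can be illustrated without aufs at all. The sketch below is not aufs: it only mimics the lookup order with two plain directories, and it ignores details such as whiteouts (deleted files). The function names union_resolve and union_write are made up for this example. On a real system the union would be created with something like `mount -t aufs -o dirs=/rw=rw:/ro=ro aufs /mnt`.

```shell
#!/bin/sh
# Toy model of a two-branch union: a read-write branch layered
# over a read-only branch.

# union_resolve RW_DIR RO_DIR RELPATH
# Print the file that would be served for RELPATH: the read-write
# branch wins, the read-only branch is the fallback.
union_resolve() {
    rw=$1; ro=$2; rel=$3
    if [ -e "$rw/$rel" ]; then
        echo "$rw/$rel"
    elif [ -e "$ro/$rel" ]; then
        echo "$ro/$rel"
    else
        return 1
    fi
}

# union_write RW_DIR RELPATH CONTENT
# Writes always land on the read-write branch; the read-only
# branch is never touched.
union_write() {
    mkdir -p "$(dirname "$1/$2")" && printf '%s\n' "$3" > "$1/$2"
}
```

Before any write, `union_resolve rw ro etc/fstab` points into the read-only directory; after `union_write rw etc/fstab ...` it points into the read-write one, while the original file stays unchanged.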

Now, how do you set that up for / ? As you know, the root of your system cannot easily be remounted once the system has booted. The idea is thus to prepare it in an initramfs (so that / is composed of two overlaid filesystems, one read-only, the other read-write) before the real root takes over.

What follows works for Ubuntu 10.04 using the 2.6.32-24 kernel (as the latest one does not include the aufs module). I assume that you have already debootstrapped a lucid Ubuntu into a loop-mounted filesystem image. Chroot into the directory where you mounted the image and do the following:

apt-get install aufs-tools

echo aufs >> /etc/initramfs-tools/modules

Next, add the script that creates the aufs hierarchy as /etc/initramfs-tools/scripts/init-bottom/__rootaufs and chmod it to 755.

This comes from the Ubuntu community wiki; I’ve adapted the script a little so that the read-write partition is /dev/sda2:

#!/bin/sh
# Copyright 2008 Nicholas A. Schembri State College PA USA
#
# This program is free software: you can redistribute it and/or modify
# it under the terms of the GNU General Public License as published by
# the Free Software Foundation, either version 3 of the License, or
# (at your option) any later version.
#
# This program is distributed in the hope that it will be useful,
# but WITHOUT ANY WARRANTY; without even the implied warranty of
# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the
# GNU General Public License for more details.
#
# You should have received a copy of the GNU General Public License
# along with this program. If not, see
# <http://www.gnu.org/licenses/>.

case $1 in
prereqs)
    exit 0
    ;;
esac

export aufs

for x in $(cat /proc/cmdline); do
    case $x in
    root=*)
        ROOTNAME=${x#root=}
        ;;
    aufs=*)
        aufs=${x#aufs=}
        case $aufs in
        tmpfs-debug)
            aufs=tmpfs
            aufsdebug=1
            ;;
        esac
        ;;
    esac
done

if [ "$aufs" != "tmpfs" ]; then
    #not set in boot loader
    #I'm not loved. good bye
    exit 0
fi

modprobe -q --use-blacklist aufs
if [ $? -ne 0 ]; then
    echo root-aufs error: Failed to load aufs.ko
    exit 0
fi

#make the mount points on the init root file system
mkdir /aufs
mkdir /rw
mkdir /ro

# mount the read-write partition (/dev/sda2) and move real root out of the way
mount -t ext3 /dev/sda2 /rw
mount --move ${rootmnt} /ro
if [ $? -ne 0 ]; then
    echo root-aufs error: ${rootmnt} failed to move to /ro
    exit 0
fi

mount -t aufs -o dirs=/rw:/ro=ro aufs /aufs
if [ $? -ne 0 ]; then
    echo root-aufs error: Failed to mount /aufs files system
    exit 0
fi

#test for mount points on aufs file system
[ -d /aufs/ro ] || mkdir /aufs/ro
[ -d /aufs/rw ] || mkdir /aufs/rw

# the real root file system is hidden on /ro of the init file system. move it to /aufs/ro
mount --move /ro /aufs/ro
if [ $? -ne 0 ]; then
    echo root-aufs error: Failed to move /ro /aufs/ro
    exit 0
fi

# the read-write file system is hidden on /rw. move it to /aufs/rw
mount --move /rw /aufs/rw
if [ $? -ne 0 ]; then
    echo root-aufs error: Failed to move /rw /aufs/rw
    exit 0
fi

cat <<EOF >/aufs/etc/fstab
# This fstab lives on the read-write branch; the real fstab can be found in /ro/etc/fstab
# the root file system ' / ' has been removed.
# All Swap files have been removed.
EOF

#remove root and swap from fstab
cat /aufs/ro/etc/fstab|grep -v ' / ' | grep -v swap >>/aufs/etc/fstab
if [ $? -ne 0 ]; then
    echo root-aufs error: Failed to create /aufs/etc/fstab
    #exit 0
fi

# add the read only file system to fstab
ROOTTYPE=$(cat /proc/mounts|grep ${ROOT}|cut -d' ' -f3)
ROOTOPTIONS=$(cat /proc/mounts|grep ${ROOT}|cut -d' ' -f4)
echo ${ROOT} /ro $ROOTTYPE $ROOTOPTIONS 0 0 >>/aufs/etc/fstab

# S22mount on debian systems is not mounting /ro correctly after boot
# add to rc.local to correct what you see from df
#replace last case of exit with #exit
cat /aufs/ro/etc/rc.local|sed 's/\(.*\)exit/\1\#exit/' >/aufs/etc/rc.local
echo mount -f /ro >>/aufs/etc/rc.local

# add back the root file system. mtab seems to be created by one of the init proceses.
echo "echo aufs / aufs rw,xino=/rw/.aufs.xino,br:/rw=rw:/ro=ro 0 0 >>/etc/mtab" >>/aufs/etc/rc.local
# /rw is the /dev/sda2 ext3 partition here (the original wiki script used a tmpfs)
echo "echo /dev/sda2 /rw ext3 rw 0 0 >>/etc/mtab" >>/aufs/etc/rc.local
echo exit 0 >>/aufs/etc/rc.local

mount --move /aufs ${rootmnt}

exit 0

Once this is done, update the initramfs using:

update-initramfs -u

Exit the chroot and copy the newly generated initrd, along with the corresponding kernel, out of the chroot so that Xen can access them on the host filesystem.

Now, in the Xen config of each domU you generate, pass aufs=tmpfs on the kernel command line and reference the initrd that you copied out of the chroot. Make sure that the domU has two disks: sda1, pointing to the disk image shared by all domUs, and sda2, a small (100 MB?) disk image to which changes will be written. sda1 must be attached read-only so that it can be attached to several domUs simultaneously.
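As a rough sketch of what this could look like, the helpers below create a small ext3 image for sda2 (ext3 because that is what the initramfs script mounts) and print a matching domU config. Everything path-like here is an assumption: the KERNEL, INITRD and SHARED_IMG defaults, the memory value, and the function names are hypothetical and need adapting to your host. The MKFS variable is only there so that formatting can be stubbed out.

```shell
#!/bin/sh
# Hypothetical locations; adjust to wherever you copied the files.
KERNEL=${KERNEL:-/xen/boot/vmlinuz-2.6.32-24}
INITRD=${INITRD:-/xen/boot/initrd.img-2.6.32-24}
SHARED_IMG=${SHARED_IMG:-/xen/images/master.img}

# make_rw_image PATH SIZE_MB
# Create a sparse image of SIZE_MB megabytes and format it as ext3.
make_rw_image() {
    dd if=/dev/zero of="$1" bs=1048576 count=0 seek="$2" 2>/dev/null || return 1
    ${MKFS:-mkfs.ext3 -q -F} "$1"
}

# gen_domu_cfg NAME RW_IMAGE
# Print a domU config: shared image read-only on sda1, private
# image read-write on sda2, aufs=tmpfs on the kernel command line.
gen_domu_cfg() {
    cat <<EOF
name    = '$1'
memory  = 128
kernel  = '$KERNEL'
ramdisk = '$INITRD'
root    = '/dev/sda1 ro'
extra   = 'aufs=tmpfs'
disk    = ['file:$SHARED_IMG,sda1,r', 'file:$2,sda2,w']
vif     = ['']
EOF
}
```

A typical use would be `make_rw_image /xen/images/agent01-rw.img 100` followed by `gen_domu_cfg agent01 /xen/images/agent01-rw.img > /etc/xen/agent01.cfg` (both paths are examples).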

Depending on the number of machine instances you want, you’ll also need to increase the maximum number of loop devices on the host. This can be done by adding the line options loop max_loop=64 (or any other value you like) to a file in /etc/modprobe.d/, or the line loop max_loop=64 to /etc/modules. Be sure to rmmod and modprobe loop again, or reboot the host, so that the change takes effect.

There you go: you should now have multiple fully functional domU virtual machines running as Xen guests while sharing the same core disk image. A few extra touches are worth considering. To have a DHCP server hand out IP addresses in a coherent way, generate the MAC address in each domU config file. The machine hostname can easily be customized through a kernel parameter that you add after the aufs=tmpfs parameter. Finally, as you will probably want an SSH server running on each host, be sure to remove the SSH host keys from the shared image and add a dpkg-reconfigure openssh-server at the end of /etc/rc.local so that they are generated on first boot (they’ll be stored on the read-write partition).
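Two of these touches are easy to sketch in shell. The first helper derives a predictable MAC address from a domU index, using the 00:16:3e prefix reserved for Xen guests. The second reads a value from the kernel command line, in the same way the initramfs script reads aufs=. The function names and the hostname= parameter are hypothetical choices for this example.

```shell
#!/bin/sh
# domu_mac INDEX
# Map a domU index (0..16777215) to a MAC in the Xen range.
domu_mac() {
    n=$1
    printf '00:16:3e:%02x:%02x:%02x\n' \
        $(( $n / 65536 % 256 )) $(( $n / 256 % 256 )) $(( $n % 256 ))
}

# cmdline_param KEY [FILE]
# Print the value of KEY=value from the kernel command line
# (FILE defaults to /proc/cmdline); fail if KEY is absent.
cmdline_param() {
    key=$1
    file=${2:-/proc/cmdline}
    for word in $(cat "$file"); do
        case $word in
        "$key"=*)
            echo "${word#"$key"=}"
            return 0
            ;;
        esac
    done
    return 1
}
```

On the host, `domu_mac 258` prints 00:16:3e:00:01:02, which can go into the vif line of the config. Inside the guest, something like `hostname "$(cmdline_param hostname)"` in an early boot script could then apply a name passed as hostname=agent042 on the kernel line.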

Enjoy!

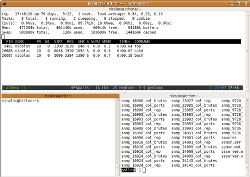

Terminator is a GNOME app that extends the gnome-terminal application with features borrowed from screen. You start with a plain terminal; when you need another one, a quick CTRL+SHIFT+o or CTRL+SHIFT+e splits it in half, either horizontally or vertically. After opening a few, you navigate between them using CTRL+SHIFT+p and CTRL+SHIFT+n to go to the previous and next one respectively. Should you need extra space for a few moments to focus on something, you can expand the current terminal so that it occupies the whole window with a simple CTRL+SHIFT+x. There are also plenty of other great features which I use less often.